Introduction to Computer Programs: Native Code, Managed Code and the .NET Framework

In the past few years, we have witnessed rapid growth in the use of electronic devices, from desktop computers to mobile phones, to perform all kinds of operations. We rely on these machines for simple everyday tasks such as browsing the web, sending and receiving emails, or accessing social networks, as well as for more specialised work, such as finding the optimal solution to a decision-making problem, simulating complex physical phenomena or determining the prime factors of an integer.

Even though the electronic devices we use today look very powerful and "smart", they could not perform any of the above tasks unless explicitly instructed how to do so. A program is a way to "teach" computers (and similar devices) how a given task should be performed.

In general, a sequence of operations devised to solve a particular problem or perform a given task is called an algorithm. When an algorithm is translated into a language a computer can understand, it becomes a program. This process, however, is more complex than it sounds; the remainder of this article explains how programs are encoded and executed.

Computer Architecture

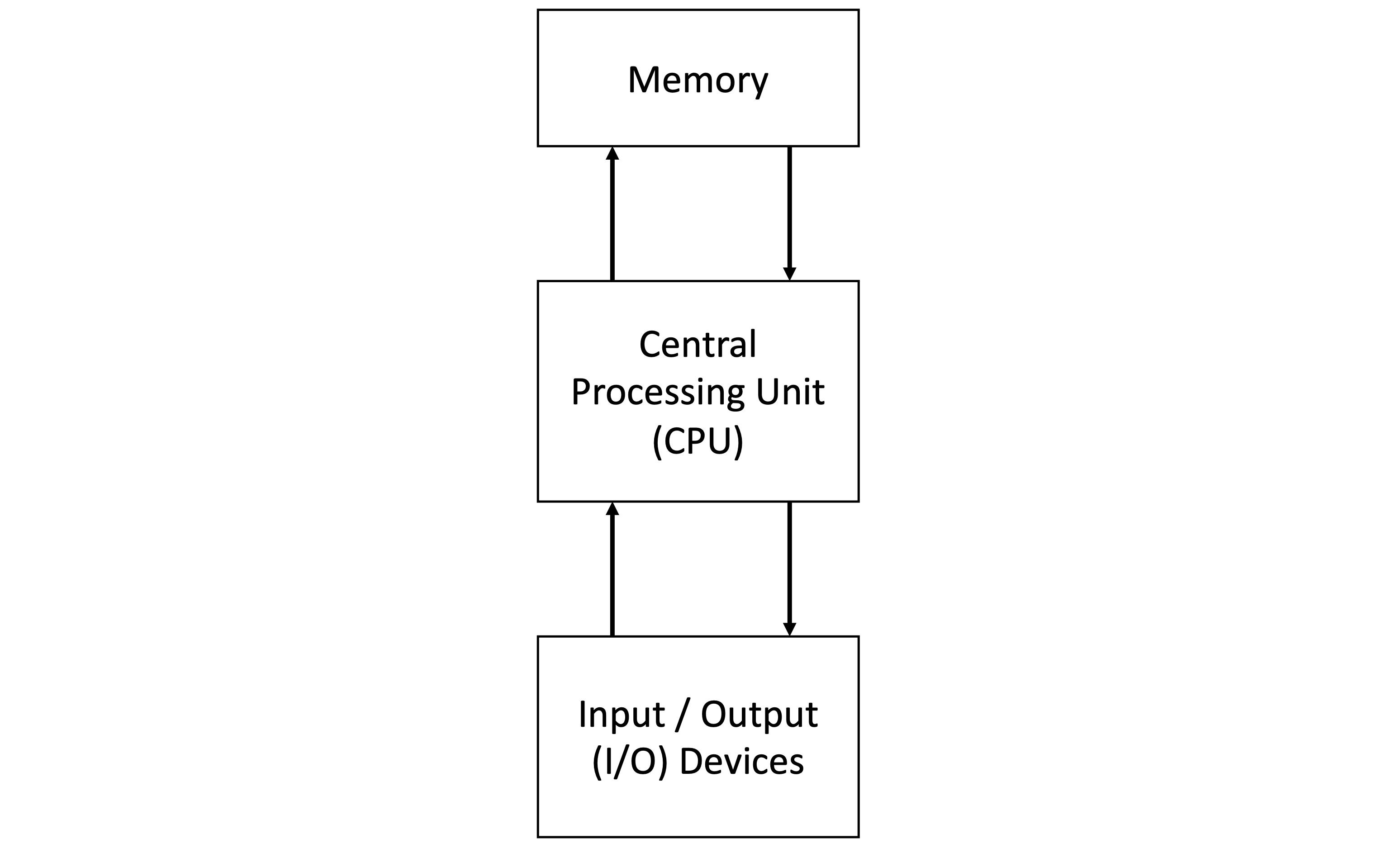

Almost all of the computing devices we use today are built according to the system architecture named after the mathematician John von Neumann, who proposed it, together with others, in 1945. The figure illustrates this architecture.

At the core of the von Neumann architecture are the Processor (CPU – Central Processing Unit) and the Memory (RAM – Random Access Memory). The operations that make up a computer program are called instructions. When a program is executed, its instructions are loaded into the computer's Memory together with the data the program will process. The Processor then fetches the instructions from the Memory one after another and executes them.
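To make the fetch-and-execute cycle concrete, here is a minimal Python sketch of a stored-program machine in which instructions and data share a single memory. The instruction names (`LOAD`, `ADD`, `STORE`, `HALT`), the single accumulator and the tuple encoding are hypothetical simplifications, not the instruction set of any real CPU.

```python
# A toy stored-program machine: instructions and data live in the same memory.
# The instruction set (LOAD, ADD, STORE, HALT) is hypothetical and simplified.

def run(memory):
    """Repeatedly fetch the instruction at the program counter and execute it."""
    pc = 0    # program counter: address of the next instruction
    acc = 0   # a single accumulator register holding intermediate results
    while True:
        op, addr = memory[pc]   # fetch
        pc += 1                 # advance to the next instruction in sequence
        if op == "LOAD":        # decode and execute
            acc = memory[addr]
        elif op == "ADD":
            acc += memory[addr]
        elif op == "STORE":
            memory[addr] = acc
        elif op == "HALT":
            return memory
        else:
            raise ValueError(f"unknown instruction {op!r}")

# Addresses 0-3 hold the program; addresses 4-6 hold its data.
memory = [
    ("LOAD", 4),     # acc <- memory[4]
    ("ADD", 5),      # acc <- acc + memory[5]
    ("STORE", 6),    # memory[6] <- acc
    ("HALT", None),
    7,               # data
    35,              # data
    0,               # the result is written here
]
run(memory)
print(memory[6])  # 42
```

Note that nothing distinguishes "program" cells from "data" cells except how the processor uses them, which is the essence of the stored-program idea.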

In today's computing devices, these hardware components are built as digital circuits, i.e., electric circuits in which a signal can take only one of two discrete voltage levels, such as 0 V and 5 V (modern processors use considerably lower voltages, but the principle is the same). These levels represent information: in this example, 0 V maps to the bit '0' and 5 V to the bit '1'. Bits, short for binary digits, are then combined into sequences that encode both the program's instructions and the data to be processed.
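The mapping from voltage levels to bits, and from bit sequences to values, can be illustrated with a short Python sketch. The 0 V / 5 V levels follow the example in the text; the 8-bit width, the midpoint threshold and the use of ASCII to read the bits as a character are assumptions made for illustration (real logic families define noise margins rather than a single midpoint).

```python
# Illustrative only: map bits to two voltage levels (0 V for '0', 5 V for '1')
# and show that one bit sequence can encode different things depending on
# how it is interpreted.

LOW, HIGH = 0.0, 5.0  # volts

def to_bits(value, width=8):
    """Return the binary digits of a non-negative integer as a string."""
    return format(value, f"0{width}b")

def to_voltages(bits):
    """Map each binary digit to the voltage level that represents it."""
    return [HIGH if b == "1" else LOW for b in bits]

def from_voltages(levels):
    """Read levels back; as a simplification, anything above the midpoint is '1'."""
    return "".join("1" if v > (LOW + HIGH) / 2 else "0" for v in levels)

bits = to_bits(65)
print(bits)                # 01000001
print(to_voltages(bits))
print(int(bits, 2))        # read as an unsigned integer: 65
print(chr(int(bits, 2)))   # read as an ASCII character: A
print(from_voltages([0.2, 4.8, 0.1, 0.0, 0.3, 0.1, 0.0, 4.9]))  # 01000001
```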

In the past, computers were quite different. Ada Lovelace is widely credited with writing the first published computer program, in 1843. It was designed for the Analytical Engine, a mechanical general-purpose computer conceived by Charles Babbage in the 1830s but never completed, and the way it was expressed differs greatly from how programs are encoded today.

Binary-encoded instructions are also referred to as machine code. Each instruction is uniquely identified by a sequence of binary digits. When an instruction is loaded, the voltage levels associated with those digits activate specific components of the CPU. This mechanism allows the CPU to perform arithmetic operations on data, such as addition, subtraction and multiplication, and to load, copy and move data between memory locations. To process data, the CPU first loads it into small internal storage areas called registers.
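The idea that a fixed sequence of bits identifies each instruction can be sketched with a hypothetical 16-bit instruction format: a 4-bit opcode, a 2-bit register number and a 10-bit memory address. The opcode values and field widths below are invented for illustration and do not correspond to any real CPU.

```python
# Hypothetical 16-bit instruction format, invented for illustration:
#   bits 15-12: opcode | bits 11-10: register number | bits 9-0: memory address
OPCODES = {"LOAD": 0b0001, "ADD": 0b0010, "STORE": 0b0011}
MNEMONICS = {code: name for name, code in OPCODES.items()}

def encode(op, reg, addr):
    """Pack one instruction into a single 16-bit machine word."""
    if not (0 <= reg < 4 and 0 <= addr < 1024):
        raise ValueError("register or address out of range")
    return (OPCODES[op] << 12) | (reg << 10) | addr

def decode(word):
    """Unpack a 16-bit word into (mnemonic, register, address)."""
    opcode = (word >> 12) & 0b1111
    reg = (word >> 10) & 0b11
    addr = word & 0b11_1111_1111
    return MNEMONICS[opcode], reg, addr

word = encode("LOAD", 1, 21)   # "load memory[21] into register 1"
print(format(word, "016b"))    # 0001010000010101
print(decode(word))            # ('LOAD', 1, 21)
```

A real CPU does this decoding in hardware: the opcode bits select which circuit is activated, while the remaining bits select the register and memory location involved.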

For example, the animated figure shows a sequence of CPU instructions that adds the contents of memory locations 21 and 22. The CPU first loads the data from those locations into its internal registers R1 and R2. When the addition is performed, the result is temporarily stored in register R1 and then copied to its destination, memory location 24.
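The sequence described above can be traced step by step with a small register-machine simulation. The addresses (21, 22, 24) and the registers R1 and R2 come from the example; the instruction names and the initial values stored at addresses 21 and 22 (5 and 9) are hypothetical.

```python
# Trace of the example: add memory[21] and memory[22], store the sum in memory[24].
# Instruction names and the initial data values (5 and 9) are hypothetical.

memory = {21: 5, 22: 9, 24: 0}
registers = {"R1": 0, "R2": 0}

program = [
    ("LOAD", "R1", 21),     # R1 <- memory[21]
    ("LOAD", "R2", 22),     # R2 <- memory[22]
    ("ADD", "R1", "R2"),    # R1 <- R1 + R2 (the result stays in R1)
    ("STORE", "R1", 24),    # memory[24] <- R1
]

for op, reg, operand in program:
    if op == "LOAD":        # copy a memory cell into a register
        registers[reg] = memory[operand]
    elif op == "ADD":       # add another register's value into this one
        registers[reg] += registers[operand]
    elif op == "STORE":     # copy the register back to memory
        memory[operand] = registers[reg]
    print(op, reg, operand, registers)

print(memory[24])  # 14
```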

You may now wonder how programs complex enough to solve the challenging problems mentioned at the beginning could be developed from such elementary instructions. It would not be impossible, but it would be very complicated and require considerable effort.

First, programmers would have to be familiar with the instruction set of the CPU on which their program will run. Then, after designing an algorithm for the problem they want to solve, they would have to encode it using that set of low-level binary machine instructions. Finally, they would need to manipulate the data on which those instructions operate by explicitly referring to memory addresses and CPU registers.

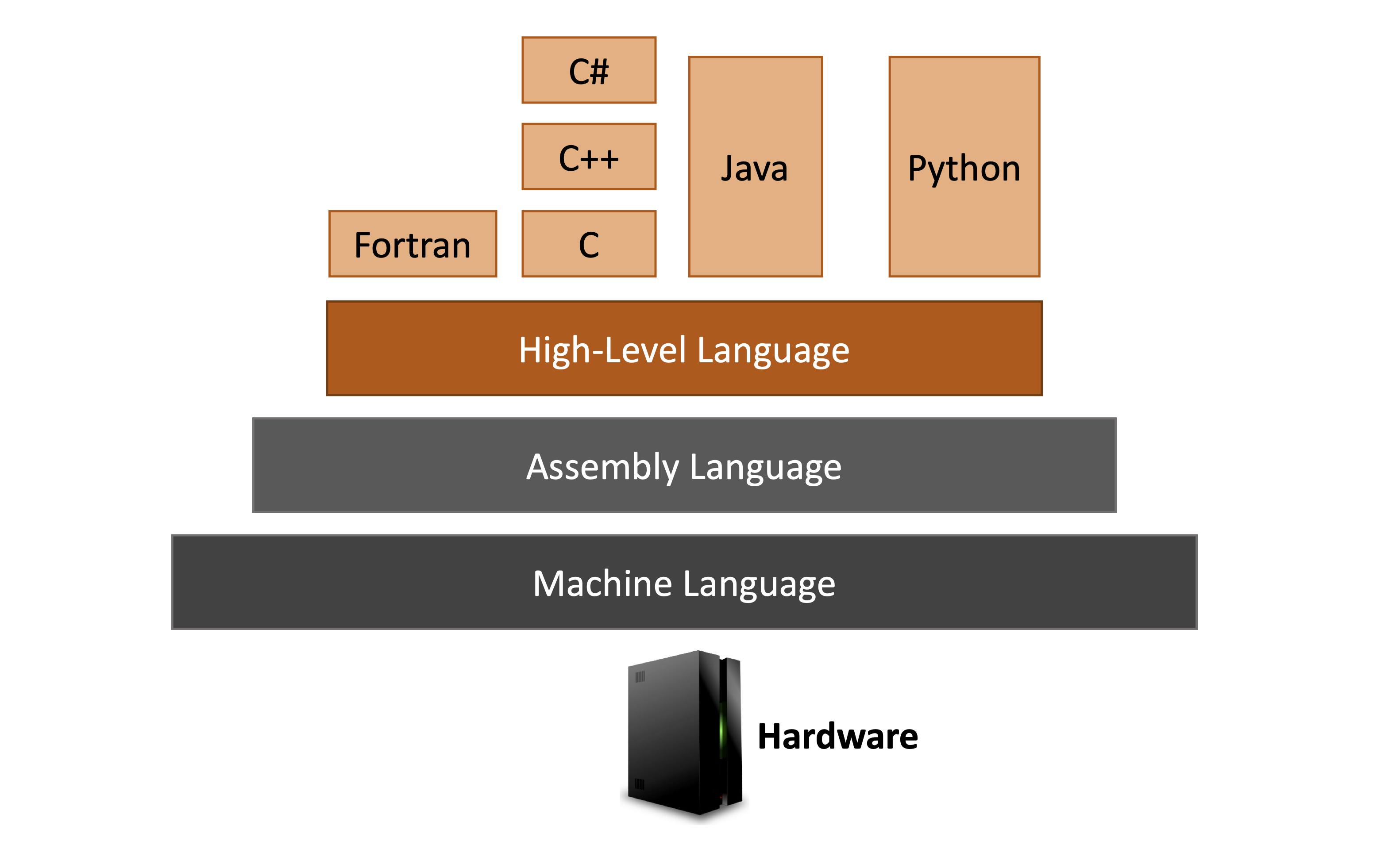

Luckily, today's programming languages provide abstractions that allow software developers to express their algorithms at a higher level. As a result, raw manipulation of bits and direct exposure to the details of the underlying hardware are no longer required.

Programming Languages

Programming languages provide a notation for writing programs that, compared to machine code, is closer to human natural languages. As the figure shows, they can offer different levels of abstraction, depending on how close they are to the hardware (at the bottom) or to human natural languages (at the top).

Whatever abstraction a language provides, a computer can only understand and execute the machine code of its CPU. Any high-level program must therefore be converted into equivalent binary code before it can actually run. This conversion is performed by dedicated programs known as compilers and interpreters. This article focuses on compilers, although the compilation process can be carried out in different ways and can produce output code with different structures. We will now introduce the concepts of native code and managed code.
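As a rough illustration of what a compiler does, the sketch below uses Python's standard `ast` module to parse a small arithmetic expression and translate it into a flat list of lower-level instructions; a separate loop then executes them. The target here is a hypothetical stack machine rather than a real CPU, and the instruction names (`PUSH`, `ADD`, `SUB`, `MUL`) are invented. Real compilers also perform type checking, optimisation and much more.

```python
import ast

# Hypothetical instruction names for a toy stack machine.
BINOPS = {ast.Add: "ADD", ast.Sub: "SUB", ast.Mult: "MUL"}

def compile_expr(source):
    """Translate an integer arithmetic expression into stack instructions."""
    code = []

    def emit(node):
        if isinstance(node, ast.Constant) and isinstance(node.value, int):
            code.append(("PUSH", node.value))
        elif isinstance(node, ast.BinOp) and type(node.op) in BINOPS:
            emit(node.left)                        # operands first...
            emit(node.right)
            code.append((BINOPS[type(node.op)],))  # ...then the operator
        else:
            raise SyntaxError(f"unsupported construct: {ast.dump(node)}")

    emit(ast.parse(source, mode="eval").body)
    return code

def execute(code):
    """Run the generated instructions on a simple operand stack."""
    stack = []
    for instr in code:
        if instr[0] == "PUSH":
            stack.append(instr[1])
        else:
            right, left = stack.pop(), stack.pop()
            if instr[0] == "ADD":
                stack.append(left + right)
            elif instr[0] == "SUB":
                stack.append(left - right)
            elif instr[0] == "MUL":
                stack.append(left * right)
    return stack.pop()

program = compile_expr("2 + 3 * 4")
print(program)           # [('PUSH', 2), ('PUSH', 3), ('PUSH', 4), ('MUL',), ('ADD',)]
print(execute(program))  # 14
```

The key point is the separation of phases: translation happens once, producing a program in a lower-level form, and execution happens afterwards on that output.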

Native Code

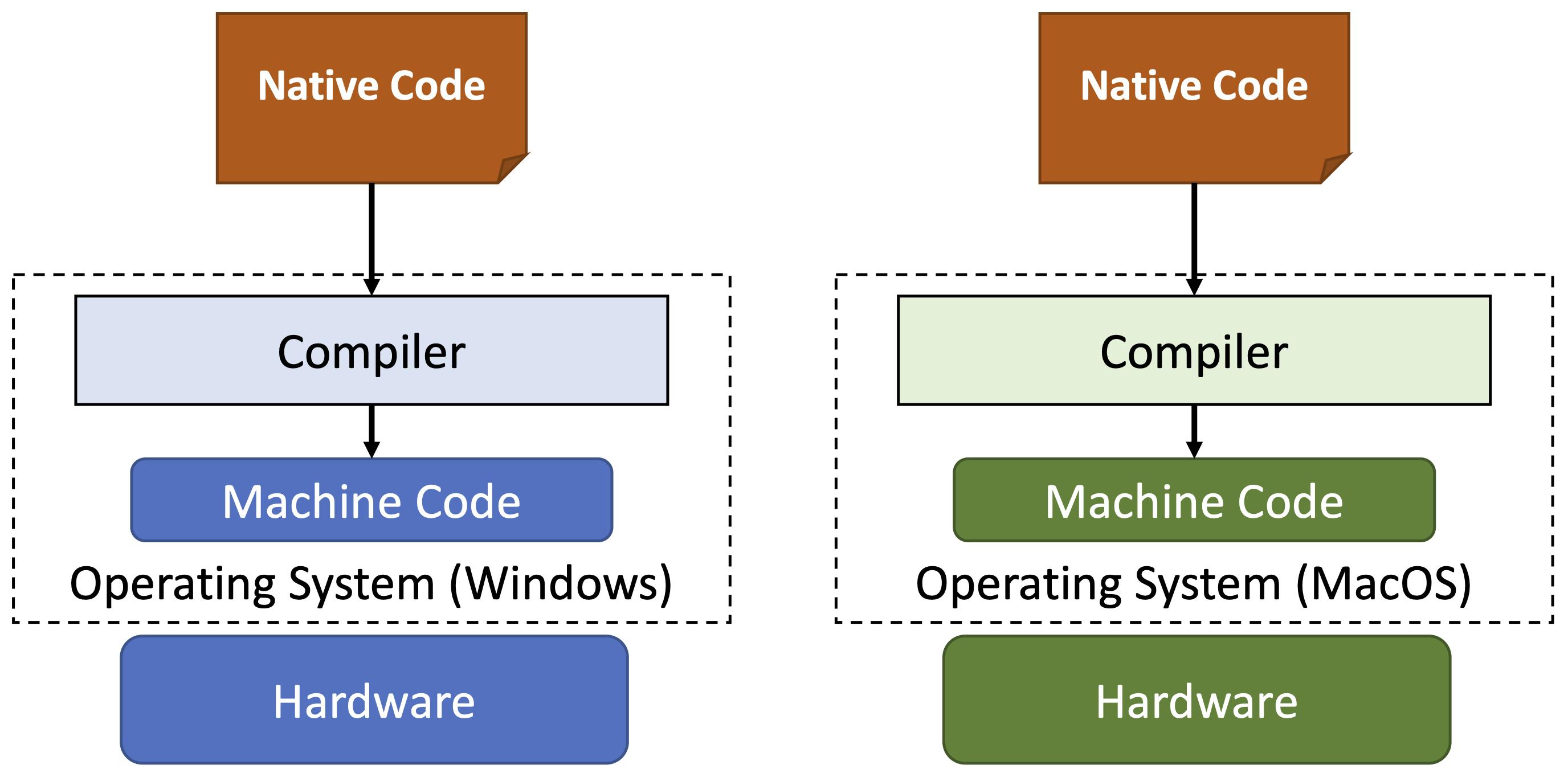

A high-level program specification (the source code) is given as input to a compiler, which translates it into the machine code of a specific CPU, usually that of the system on which the compiler is running. The generated code is specific to that hardware and only works on that kind of system. For instance, in the figure, the same source code is given to two compilers: the machine code generated by the light blue compiler will only run on the blue hardware, and the machine code generated by the light green compiler will only run on the green hardware.

The green and blue machine codes are not interchangeable: each compiler generates native machine code that is customised (and optimised) for one particular kind of system. We say that the generated machine code is not portable. This is what happens when you compile a C or C++ program, for example. Because native machine code needs no further conversion at runtime, the Operating System can load it and the CPU can execute it directly, which usually makes it faster than the intermediate language code discussed in the next part of the article.
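The lack of portability can be mimicked with two hypothetical CPUs, "blue" and "green", whose instruction sets use different opcodes for the same operations. The opcode numbers are invented, and decoding stands in for execution; the point is only that a binary produced for one instruction set is not understood by the other.

```python
# Two hypothetical CPUs with different binary encodings for the same operations.
BLUE_OPCODES = {"LOAD": 0x01, "ADD": 0x02, "STORE": 0x03}
GREEN_OPCODES = {"LOAD": 0x10, "ADD": 0x20, "STORE": 0x30}

def compile_native(program, opcodes):
    """'Compile' symbolic instructions into bytes for one specific CPU."""
    out = bytearray()
    for op, operand in program:
        out += bytes([opcodes[op], operand])
    return bytes(out)

def cpu_decode(binary, opcodes):
    """A CPU only understands its own opcodes; anything else is rejected."""
    known = {code: name for name, code in opcodes.items()}
    decoded = []
    for i in range(0, len(binary), 2):
        opcode, operand = binary[i], binary[i + 1]
        if opcode not in known:
            raise RuntimeError(f"illegal instruction 0x{opcode:02x} at offset {i}")
        decoded.append((known[opcode], operand))
    return decoded

source = [("LOAD", 21), ("ADD", 22), ("STORE", 24)]
blue_binary = compile_native(source, BLUE_OPCODES)
print(blue_binary.hex())                      # 011502160318
print(cpu_decode(blue_binary, BLUE_OPCODES))  # understood by the blue CPU
try:
    cpu_decode(blue_binary, GREEN_OPCODES)    # but not by the green one
except RuntimeError as err:
    print("green CPU:", err)
```

To run the same program on the green CPU, the source would have to be compiled again with the green compiler.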

Managed Code and Intermediate Code

The term managed code refers to programs, typically written in high-level languages such as C# or Visual Basic, that are not compiled directly into executable machine code. Instead, they are compiled into an intermediate language (IL) and executed by a runtime that provides services such as automatic memory management, security and exception handling.

IL is a low-level binary code that is not tied to any specific hardware. It targets an application virtual machine, which translates it into actual native code at runtime. Because a separate application virtual machine can exist for each hardware architecture and operating system, the same intermediate code can run on any of them without modification. Moreover, as mentioned above, the program runs within a self-contained environment that provides automatic memory management, which increases safety and reduces the likelihood of crashes on the host machine.
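CPython is not .NET, but it follows a comparable pattern that is easy to inspect: source code is first compiled into bytecode, a platform-independent (though Python-version-specific) intermediate format, which the interpreter's virtual machine then executes. The sketch uses the standard `compile`, `dis` and `eval` functions. The exact bytecode printed differs between Python versions, and, unlike .NET's runtime, CPython by default interprets its bytecode rather than JIT-compiling it to machine code.

```python
import dis

source = "a + b * 2"

# Step 1: compile the source text into a code object holding bytecode.
code = compile(source, "<example>", "eval")

# Step 2: inspect the intermediate instructions (the listing is version-specific).
dis.dis(code)
print(code.co_code.hex())  # the raw bytes of the intermediate code

# Step 3: hand the same code object to the virtual machine for execution.
print(eval(code, {"a": 1, "b": 20}))  # 41
```

The same code object can be executed repeatedly with different inputs, and the same bytecode would run on any platform with a matching CPython version.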

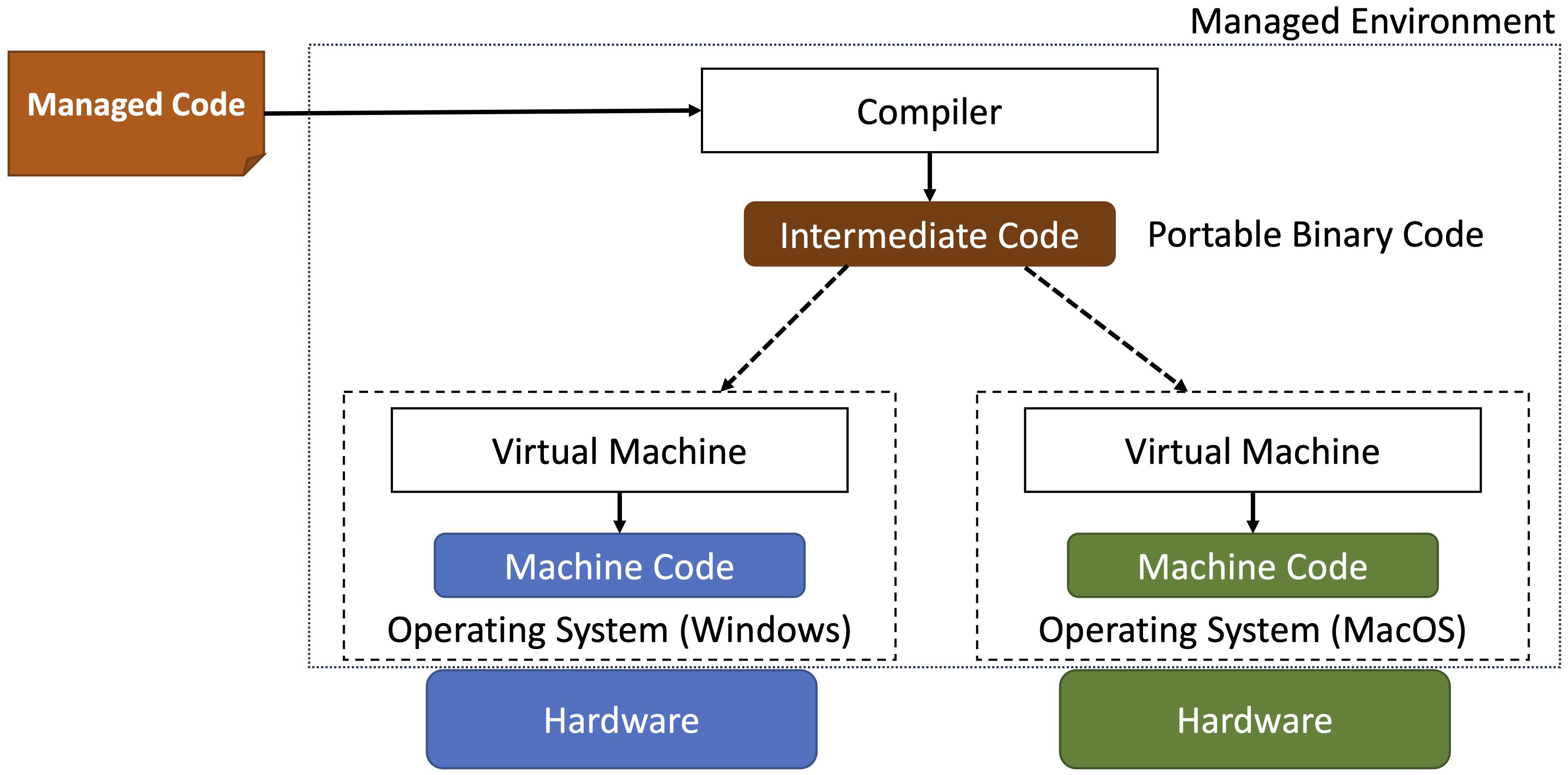

The figure shows two types of systems (blue and green), each with its own application virtual machine. The managed code is first translated into intermediate code by a compiler. The intermediate code is then given as input to an application virtual machine, which generates, at runtime, the executable machine code for the hardware and operating system on which the program runs.
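The two systems in the figure can be mimicked in a few lines: one portable IL program is handed to two hypothetical virtual machines, each of which translates it into its own host's instruction format before running it. The IL, the "blue" and "green" native formats and their instruction names are all invented for illustration.

```python
# One portable intermediate-language (IL) program, independent of any CPU.
IL_PROGRAM = [("push", 2), ("push", 40), ("add", None)]

class VirtualMachine:
    """A hypothetical VM that translates IL into its host's native format."""

    def __init__(self, name, native_names):
        self.name = name
        self.native_names = native_names  # IL opcode -> host-specific opcode

    def translate(self, il):
        # The same IL becomes different "machine code" on each host.
        return [(self.native_names[op], arg) for op, arg in il]

    def run(self, il):
        native = self.translate(il)
        stack = []
        for op, arg in native:
            if op == self.native_names["push"]:
                stack.append(arg)
            elif op == self.native_names["add"]:
                stack.append(stack.pop() + stack.pop())
        return native, stack.pop()

blue = VirtualMachine("blue", {"push": "B_PUSH", "add": "B_ADD"})
green = VirtualMachine("green", {"push": "G_LOAD_IMM", "add": "G_SUM"})

for vm in (blue, green):
    native, result = vm.run(IL_PROGRAM)
    print(vm.name, native, "->", result)
```

Only the virtual machines are platform-specific; the IL program itself is shipped unchanged to both systems.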

.NET (like Java) uses this approach, which makes code inherently more portable than native code. On the other hand, the additional conversion required during program execution can result in somewhat lower performance than native code.

Generally, just-in-time (JIT) compilation is used: machine code is produced when a set of intermediate code instructions (such as a function) is executed for the first time. Another approach is to interpret the intermediate code directly, without converting it into runnable machine code. Some .NET (and Java) Software Development Kits (SDKs) also offer tools that precompile code using ahead-of-time (AOT) compilation to improve application startup time.
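The "translate on first call, reuse afterwards" behaviour of JIT compilation can be sketched as a cache keyed by function name. In this hypothetical example, "translation" turns a small invented stack-based IL into a Python closure, which stands in for generated machine code; a real JIT compiler, such as the one in the .NET runtime, emits actual CPU instructions and performs optimisations this sketch ignores.

```python
# Hypothetical IL: each function is a list of stack instructions over its arguments.
IL_FUNCTIONS = {
    "square_plus_one": [("arg", 0), ("arg", 0), ("mul",), ("const", 1), ("add",)],
}

translation_count = 0
jit_cache = {}

def translate(il):
    """Turn IL into a Python callable once (a stand-in for emitting machine code)."""
    global translation_count
    translation_count += 1
    steps = []
    for instr in il:
        if instr[0] == "arg":
            steps.append(lambda s, a, i=instr[1]: s.append(a[i]))
        elif instr[0] == "const":
            steps.append(lambda s, a, v=instr[1]: s.append(v))
        elif instr[0] == "add":
            steps.append(lambda s, a: s.append(s.pop() + s.pop()))
        elif instr[0] == "mul":
            steps.append(lambda s, a: s.append(s.pop() * s.pop()))

    def compiled(*args):
        stack = []
        for step in steps:
            step(stack, args)
        return stack.pop()

    return compiled

def call(name, *args):
    """JIT-style dispatch: translate on the first call, reuse the result afterwards."""
    if name not in jit_cache:
        jit_cache[name] = translate(IL_FUNCTIONS[name])
    return jit_cache[name](*args)

print(call("square_plus_one", 3))  # 10 (translated during this first call)
print(call("square_plus_one", 4))  # 17 (cached translation reused)
print(translation_count)           # 1
```

The first call pays the translation cost, which is why JIT-compiled programs can start more slowly; AOT compilation moves that cost to build time instead.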

Note that we used the terms 'interpreter' and 'interpreting' above. In the past, there was a clear distinction between compilers and interpreters, but the boundary has become increasingly blurred. An interpreter is a piece of software that takes a high-level program specification as input and executes its instructions one by one, without producing machine code as output. A command-line shell such as Bash is an example of an interpreter. As explained earlier, application virtual machines may use a similar approach; however, the code they execute has already been compiled into a low-level binary format, which typically makes execution considerably faster than in the traditional interpreter model.
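In contrast to a compiler, a classic interpreter reads each line and acts on it immediately, producing no translated output. The toy command language below (`set`, `add`, `print`) is hypothetical and only loosely inspired by the way a shell processes one command at a time.

```python
def interpret(script):
    """Execute a toy command language line by line, without generating any code."""
    variables = {}
    output = []
    for line_no, line in enumerate(script.splitlines(), start=1):
        words = line.split()
        if not words or words[0].startswith("#"):
            continue                     # skip blank lines and comments
        cmd, args = words[0], words[1:]
        if cmd == "set":                 # set NAME VALUE
            variables[args[0]] = int(args[1])
        elif cmd == "add":               # add NAME VALUE
            variables[args[0]] += int(args[1])
        elif cmd == "print":             # print NAME
            output.append(f"{args[0]} = {variables[args[0]]}")
        else:
            raise SyntaxError(f"line {line_no}: unknown command {cmd!r}")
    return output

script = """
# a tiny program
set x 40
add x 2
print x
"""
for line in interpret(script):
    print(line)  # x = 42
```

Because each line is executed as soon as it is read, an error on a later line is only discovered after the earlier lines have already run, a typical trait of the traditional interpreter model.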

.NET Framework

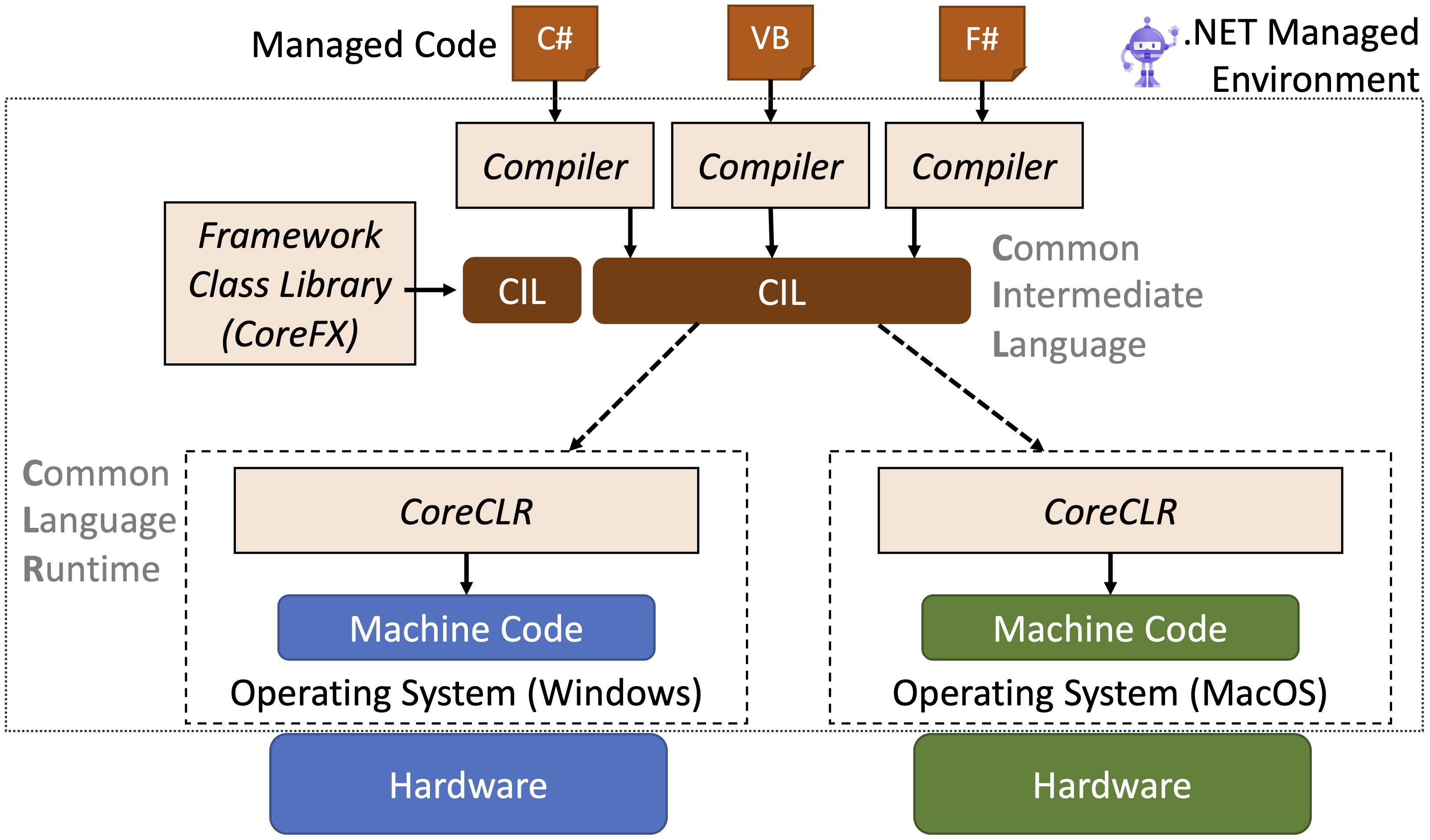

.NET is a software stack designed by Microsoft and built on the concepts of managed code, intermediate code and application virtual machines described above. As shown in the figure, programs for .NET can be written in different languages, such as C#, F# or Visual Basic. These are then compiled into intermediate code, called Common Intermediate Language (CIL), which can run transparently on any .NET virtual machine.
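The idea that several source languages share one intermediate language can be sketched with two tiny hypothetical front ends: one accepts infix syntax (`2 + 3 * 4`), the other a Lisp-like prefix syntax (`(+ 2 (* 3 4))`). Both emit the same invented stack IL, so a single runtime loop can execute code from either, loosely mirroring how C#, F# and Visual Basic all compile to CIL. Only integers, `+` and `*` are supported.

```python
import ast

# Hypothetical shared IL: ("push", n), ("add",), ("mul",)
INFIX_OPS = {ast.Add: "add", ast.Mult: "mul"}
PREFIX_OPS = {"+": "add", "*": "mul"}

def infix_front_end(source):
    """'Language A': infix syntax such as '2 + 3 * 4'."""
    def emit(node):
        if isinstance(node, ast.BinOp):
            return emit(node.left) + emit(node.right) + [(INFIX_OPS[type(node.op)],)]
        return [("push", node.value)]  # assumes an integer literal
    return emit(ast.parse(source, mode="eval").body)

def prefix_front_end(source):
    """'Language B': Lisp-like prefix syntax such as '(+ 2 (* 3 4))'."""
    tokens = source.replace("(", " ( ").replace(")", " ) ").split()

    def parse(pos):
        if tokens[pos] == "(":
            op = tokens[pos + 1]
            left, pos = parse(pos + 2)
            right, pos = parse(pos)
            return left + right + [(PREFIX_OPS[op],)], pos + 1  # skip ")"
        return [("push", int(tokens[pos]))], pos + 1

    return parse(0)[0]

def runtime(il):
    """A single shared runtime executes IL regardless of the source language."""
    stack = []
    for instr in il:
        if instr[0] == "push":
            stack.append(instr[1])
        else:
            right, left = stack.pop(), stack.pop()
            stack.append(left + right if instr[0] == "add" else left * right)
    return stack.pop()

il_a = infix_front_end("2 + 3 * 4")
il_b = prefix_front_end("(+ 2 (* 3 4))")
print(il_a == il_b)                  # True: both front ends emit identical IL
print(runtime(il_a), runtime(il_b))  # 14 14
```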

The .NET component (application virtual machine) that runs CIL code is the Common Language Runtime (CLR). The other essential components of the .NET Framework are the compilers that generate that code and a set of ready-to-use library functionality known as the Framework Class Library. A library can be seen as a pre-compiled piece of intermediate language code that provides a specific functionality, which other programmers can then use in their own code without reimplementing it from scratch.

An example is the Console.WriteLine() method, which prints messages to the console terminal of an Operating System. A programmer can use it without knowing how the Operating System manages the terminal, or how messages are encoded and streamed to it: this problem has already been solved and is provided as one of the Framework Class Library's functionalities. This is a crucial concept related to libraries. The code reuse and modularity that libraries provide are essential for designing complex software systems that can be easily maintained.
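Python's built-in `print` plays a role similar to `Console.WriteLine()`: it hides the details of encoding text and handing it to the operating system. The sketch below makes some of those hidden steps explicit using the standard `sys` and `os` modules. It assumes that file descriptor 1 is standard output (true on POSIX systems and for the Windows C runtime) and glosses over buffering details.

```python
import os
import sys

message = "Hello, world!"

# High-level: the library handles encoding, buffering and the newline for us.
print(message)

# Lower-level: roughly what the library does on our behalf, made explicit.
data = (message + "\n").encode(sys.stdout.encoding or "utf-8")  # text -> bytes
sys.stdout.flush()            # make sure earlier buffered output goes out first
written = os.write(1, data)   # hand the raw bytes to the OS (file descriptor 1)
print(written == len(data))   # True if the OS accepted every byte
```

Most programs never need the second half: that is precisely the work a well-designed library takes off the programmer's hands.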

The next article will describe how managed code programs can be written in C#, one of the languages of the .NET Framework.